Distillate is a tool for people who use WebRTC for business calls. It gives you a list of keywords for each conversation you have had, so you can remind yourself what you discussed, and when.

A few months ago I attended the TADHack London mini-hack, a fantastic hackathon that brings together people working in programmable telecoms. The London mini-hack is a side event to the main global TADHack hackathon, which takes place later in the year. The mini-hack focuses on WebRTC, with the goal of showcasing some of the outcomes at the London WebRTC conference the following week.

Although I didn’t join the hacking for the last London mini-hack, I did attend the awards ceremony. If I were to compare this event to the last one, my main takeaway is that WebRTC has matured for end users since last year. As well as exploring the limits of what’s possible with the technology, people this year focused much more on solving real problems that WebRTC users are experiencing, which is great news!

Aside from WebRTC, there were also many people working on chat systems. Cisco Spark and Matrix both participated in the event.

Generating an idea

When I arrived at IDEALondon I had decided I wanted to perform some form of speech-to-text, but I wasn’t too sure what to do. While mulling over options, I met Alexandre François. We discussed a few things we each wanted to play with, and came up with an idea.

Why not try passing the recording of a WebRTC call to the IBM Watson engine to see what interesting information we could get out of it?

Capturing the data

The first challenge for us was to capture a recording of a conversation over WebRTC. WebRTC is a peer-to-peer technology and will attempt to stream data from one participant to another without relaying it. When it can’t establish a direct connection between peers, it relays the data through a server. Because the media is encrypted between the peers, the relay server cannot access the raw data.

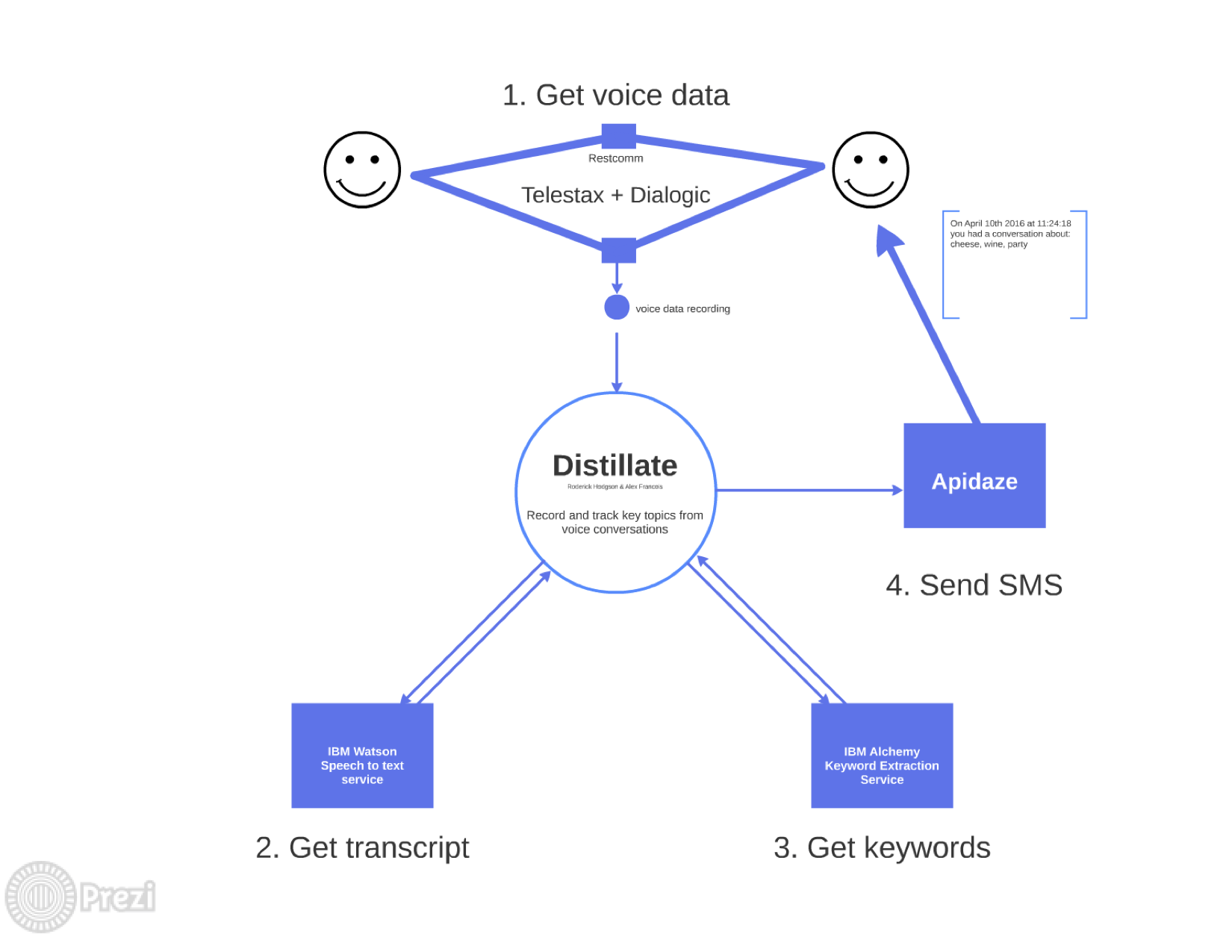

The most sensible solution for capturing the data in a call between Alice and Bob was to have a recording service act as a participant in the call. Alice calls the service; the service answers the call from Alice and establishes another call to Bob. The service forwards the data from each call so both can hear one another.
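As a toy illustration of that arrangement, the sketch below models the service as a bridge between two call legs. It is an in-memory model only, not real WebRTC or SIP media handling, and the `Leg` and `RecordingBridge` names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Leg:
    """One side of the bridged call (hypothetical stand-in for a real media leg)."""
    name: str
    received: List[bytes] = field(default_factory=list)


@dataclass
class RecordingBridge:
    """Sits between two legs, forwards audio both ways and keeps a copy."""
    alice: Leg
    bob: Leg
    recording: List[Tuple[str, bytes]] = field(default_factory=list)

    def on_audio(self, sender: Leg, frame: bytes) -> None:
        # The bridge is a participant in each call, so it sees the audio
        # itself and can store it before passing it on.
        self.recording.append((sender.name, frame))
        receiver = self.bob if sender is self.alice else self.alice
        receiver.received.append(frame)


alice, bob = Leg("alice"), Leg("bob")
bridge = RecordingBridge(alice, bob)
bridge.on_audio(alice, b"hello bob")
bridge.on_audio(bob, b"hi alice")
```

The point of the design is that the recording comes for free: the service is an endpoint of both calls rather than a relay in the middle of one.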

To do so, we made use of Telestax’s Restcomm, with functionality powered by Dialogic’s PowerMedia XMS. Using that, we created a SIP endpoint that connected the two WebRTC identities in the system. Through the Restcomm interface, we requested that the endpoint generate a URL for the recording and send it to a RESTful service at the end of the call.
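On the receiving side, pulling the recording URL out of such a notification could look roughly like the sketch below. It assumes a form-encoded request body and a field called `RecordingUrl`; both are assumptions made for illustration, and the actual payload depends on how the Restcomm application is set up. Python is used here purely as an illustration language.

```python
from typing import Optional
from urllib.parse import parse_qs

# Hypothetical field name; the real notification format may differ.
RECORDING_FIELD = "RecordingUrl"


def extract_recording_url(body: str) -> Optional[str]:
    """Return the recording URL from a form-encoded body, or None if absent."""
    fields = parse_qs(body)
    values = fields.get(RECORDING_FIELD, [])
    return values[0] if values else None


# Made-up example body, with the URL percent-encoded as a form post would send it.
example_body = (
    "CallSid=abc123"
    "&RecordingUrl=https%3A%2F%2Fexample.com%2Frecordings%2Fabc123.wav"
)
print(extract_recording_url(example_body))
```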

Processing

We wrote a Node.js REST server that was able to receive the request from the Restcomm interface. This service worked through the following pipeline:

- Retrieve the recording

- Pass the recording to IBM Watson for transcription of the voice data

- Call IBM Watson’s keyword API to retrieve keywords for the transcript, in order of importance

Through a few simple RESTful requests we were able to deliver an ordered set of keywords to the user, without having to write any of the speech-to-text or keyword-generation heavy lifting ourselves.
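The shape of that pipeline can be sketched in a few lines. This is not the code from the hack (which was a Node.js server); it is a minimal Python sketch in which each step is passed in as a function, so the flow is visible without assuming anything about Watson’s actual request format. The `distill` function and the three fake steps are hypothetical.

```python
from typing import Callable, List, Tuple

Fetch = Callable[[str], bytes]        # recording URL -> audio bytes
Transcribe = Callable[[bytes], str]   # audio bytes -> transcript
ExtractKeywords = Callable[[str], List[Tuple[str, float]]]  # -> (keyword, relevance)


def distill(recording_url: str, fetch: Fetch, transcribe: Transcribe,
            extract_keywords: ExtractKeywords, top_n: int = 5) -> List[str]:
    """Run the three steps and return the top keywords, most relevant first."""
    audio = fetch(recording_url)
    transcript = transcribe(audio)
    scored = extract_keywords(transcript)
    ranked = sorted(scored, key=lambda pair: pair[1], reverse=True)
    return [keyword for keyword, _ in ranked[:top_n]]


# Stand-ins for the real HTTP calls, so the sketch runs offline.
def fake_fetch(url: str) -> bytes:
    return b"fake-audio"


def fake_transcribe(audio: bytes) -> str:
    return "we agreed the pricing for the new contract"


def fake_keywords(text: str) -> List[Tuple[str, float]]:
    return [("contract", 0.7), ("pricing", 0.9), ("agreed", 0.4)]


keywords = distill("https://example.com/recordings/abc123.wav",
                   fake_fetch, fake_transcribe, fake_keywords, top_n=2)
print(keywords)  # ['pricing', 'contract']
```

In a real service, the three fakes would be replaced by functions that make the corresponding HTTP requests.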

With this information we were then able to call Apidaze’s API to send the “top keywords” to the user via SMS.
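One detail worth handling before sending is message length, since a single SMS segment holds 160 characters in the standard GSM alphabet. The sketch below shows one way to build the text from a ranked keyword list; the `compose_sms` helper and the message wording are hypothetical, and the call to the SMS provider itself is deliberately left out.

```python
from typing import List

SMS_LIMIT = 160  # characters in a single GSM-7 SMS segment


def compose_sms(keywords: List[str], prefix: str = "Your call covered: ",
                limit: int = SMS_LIMIT) -> str:
    """Join keywords, most important first, stopping before the text gets too long."""
    kept: List[str] = []
    for keyword in keywords:
        candidate = prefix + ", ".join(kept + [keyword])
        if len(candidate) > limit:
            break  # keywords are ranked, so everything after this matters less
        kept.append(keyword)
    return prefix + ", ".join(kept)


print(compose_sms(["pricing", "contract", "deadline"]))
# Your call covered: pricing, contract, deadline
```

Because the keywords arrive in order of importance, truncating from the end loses the least useful ones first.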

Deployment

With most of the work done by IBM Watson, we just had a simple Node.js server to host online. We placed it on a micro AWS instance and automated the deployment using Ansible, to make it repeatable and easy to update. The deployment also used a Node.js Ansible “role”, to minimise the effort required to get up and running in a stable way.

With that in place, we were able to give a live demo during our five-minute presentation.

Conclusion

Working with IBM Watson’s API helped me understand how far cloud services have come in providing machine-learning-based tools for everyday operations. This ended up being a simple project development-wise, given how much functionality the services on offer at the hack provided. In fact, had we used something like Node-RED to drag and drop the data connections between the web APIs, we might not have had to write a single line of code at all.

This TADHack was an amazing opportunity to discuss ideas with people working in programmable telecoms. If you’re in any way interested in the future of communication, WebRTC and chat services, do check out the next event.